Welcome to this article, where we will try to understand serverless architecture together. We will dive into its essence, the fundamental problems it solves, and its underlying principles, look at cold starts, and challenge the notion that vendor lock-in is a serious drawback. We will also examine scenarios where serverless shines and where it might not be the right solution.

By the end of this article, I hope you will have a working understanding of serverless architecture that helps you make informed decisions about adopting it and leveraging its benefits. So let’s start.

What is Serverless Architecture?

Serverless architecture is a cloud service model in which you build and deploy applications without having to manage the underlying infrastructure.

In a serverless setup, the cloud provider takes care of infrastructure provisioning, scaling, infrastructure security, and so on, allowing businesses to focus on writing only the code they need and delivering business logic.

In the context of a web application, the traditional approach involves many concerns, such as load balancing, rate limiting, provisioning, capacity planning, resiliency, redundancy, monitoring, alerting, and more. With a serverless architecture, the cloud service provider takes over most of these responsibilities. You also pay only for the resources you actually use (for example, per request and per unit of execution time), which improves cost and resource efficiency.

What problem does it solve?

Beyond ease of setup, pay-as-you-go pricing, and the ability to focus on core business logic, serverless architecture solves different problems and challenges for different people.

For Startups:

Lowers the hurdles of limited operational experience and operational overhead.

Time to market: helps startups bring their solutions to market faster by letting them execute and iterate on ideas quickly.

Provides a competitive advantage, allowing startups to move fast even with a small team of developers.

Avoids the large upfront cost of setting up infrastructure and hiring the right people to manage it, who are hard to find anyway.

For Enterprise:

Enterprise organizations treat compliance and infrastructure security as paramount concerns. In fact, this is one of the reasons why major enterprises, including banks, are increasingly adopting serverless architecture. By relying on cloud service providers like AWS, these enterprises can confidently offload responsibility for infrastructure-related security, such as securing servers and operating systems. This shift helps enterprises meet stringent compliance requirements and benefit from the robust security measures implemented by the cloud service provider.

Thinking of Serverless

Let’s now look more closely at why and how we should think in terms of serverless when developing an application.

For this, I rely on two important strategic principles:

Code is a liability

For the end user of your application, your code does not matter

I understand these are bold statements, but understanding their implications is crucial. The more code you write, the greater the investment required to maintain, support, and secure it. Code is a means to an end, not an end in itself. As a business owner or entrepreneur, your primary focus is your product and meeting the needs of your customers.

Consider a REST API application. It does not really matter that you wrote a very good authentication service after investing six months in it, when you could instead have used AWS Cognito, which takes care of roughly 90% of the work and leaves you with perhaps 10% of customization.

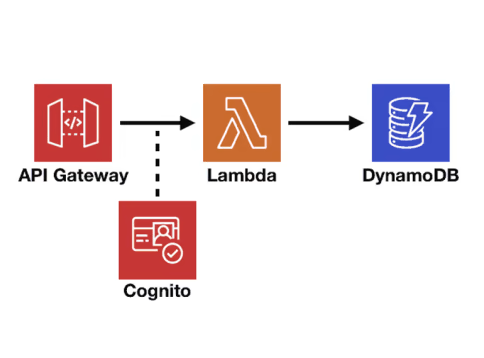

The diagram above depicts the architecture of a REST API built from several AWS services. AWS API Gateway serves as the entry point, providing scalability, rate limiting, security controls, and routing. For authentication, we use AWS Cognito, which simplifies authentication and authorization. Data is stored in DynamoDB, a fully managed NoSQL database service. Finally, the core business logic (your CRUD operations) runs in AWS Lambda, which executes and scales your code without you provisioning or managing servers. You only write the code specific to your business logic; the serverless architecture removes much of the noise that would otherwise be present.
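To make the “you only write the business logic” point concrete, here is a minimal sketch of what the Lambda function behind such an API might look like. It assumes an API Gateway REST API using Lambda proxy integration with a Cognito user pool authorizer (which passes the verified token claims to the function), and a hypothetical `/items/{id}` route. The in-memory `TABLE` dictionary is a stand-in for DynamoDB so the sketch runs locally; it is not how you would persist data in production.

```python
import json
import uuid

# Hypothetical in-memory stand-in for a DynamoDB table, so the sketch runs locally.
TABLE: dict[str, dict] = {}


def _response(status: int, body) -> dict:
    """Build a response in the shape API Gateway's Lambda proxy integration expects."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def handler(event: dict, context=None) -> dict:
    # With a Cognito user pool authorizer, verified claims arrive in the request
    # context; "sub" is the user's unique identifier.
    claims = event.get("requestContext", {}).get("authorizer", {}).get("claims", {})
    owner = claims.get("sub")
    if owner is None:
        return _response(401, {"message": "unauthenticated"})

    method = event.get("httpMethod")
    item_id = (event.get("pathParameters") or {}).get("id")

    if method == "POST":
        new_id = str(uuid.uuid4())
        item = json.loads(event.get("body") or "{}")
        # Server-controlled keys are set last so the client cannot override them.
        TABLE[new_id] = {**item, "id": new_id, "owner": owner}
        return _response(201, TABLE[new_id])

    item = TABLE.get(item_id)
    if item is None or item["owner"] != owner:
        # Hide other users' items behind a 404.
        return _response(404, {"message": "not found"})

    if method == "GET":
        return _response(200, item)
    if method == "PUT":
        item.update(json.loads(event.get("body") or "{}"))
        item["id"], item["owner"] = item_id, owner  # keep keys stable
        return _response(200, item)
    if method == "DELETE":
        del TABLE[item_id]
        return _response(200, {"deleted": item_id})
    return _response(405, {"message": "method not allowed"})
```

Everything outside this function (routing, throttling, token validation, scaling) is configuration of the managed services rather than code you maintain.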

Real-world applications are more complex, but serverless architecture can still greatly simplify the development process. By leveraging serverless services from cloud providers, you can offload the heavy lifting and focus on implementing your core business logic.

In the serverless thinking approach, the emphasis shifts towards using existing services offered by cloud service providers rather than reinventing the wheel and building those services from scratch. For example, should you run and manage Kafka or RabbitMQ yourself, or can you use AWS SQS, SNS, EventBridge, or Kinesis, which are scalable and highly available out of the box?
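With a managed queue, the code you own shrinks to the message handler. Below is a minimal sketch of a Lambda function triggered by SQS. It assumes the event source mapping has `ReportBatchItemFailures` enabled, so the function can return only the IDs of failed messages and SQS retries just those; `process_order` and the `order_id` field are hypothetical business logic.

```python
import json


def process_order(order) -> None:
    """Hypothetical business logic; raises ValueError on invalid input."""
    if not isinstance(order, dict) or "order_id" not in order:
        raise ValueError("missing order_id")


def handler(event: dict, context=None) -> dict:
    failures = []
    for record in event.get("Records", []):
        try:
            process_order(json.loads(record["body"]))
        except ValueError:  # json.JSONDecodeError is a subclass of ValueError
            # Report only this message as failed so it becomes visible again for retry.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Polling, scaling the number of consumers, and retry visibility are handled by the platform; no broker cluster needs to be sized or patched.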

Now that we have an understanding of serverless thinking, it’s time to look at one of the common concerns raised against it: vendor lock-in.

Is Vendor Lock-in Really a Concern?

One of the primary concerns about vendor lock-in in serverless architecture is that the cloud service provider might suddenly raise prices, say tenfold.

In the case of AWS, to my knowledge there has not been an abrupt price increase in the last 10 years. New SKUs (e.g. new EC2 instance types) are sometimes more expensive than older ones, but mostly because they are faster or more capable, and the price reflects the increased performance. On the contrary, thanks to market competition and AWS’s commitment to low-cost offerings, prices have gone down: AWS has reduced prices 107 times since it launched in 2006. Read more at the link in the references section.

Because the actual trend of price reductions runs contrary to this hypothetical scenario, the argument is sometimes called a lazy argument.

It is also worth noting that lock-in occurs whenever you commit to a specific web framework, programming language, or database technology. When building an application, each of these choices creates a degree of dependency that can lead to lock-in challenges.

For instance, if a web framework becomes outdated or is no longer supported, upgrading to a newer version or migrating to a different framework can be a complex and resource-intensive task. It may involve rewriting significant portions of the application and require a substantial investment of time and effort.

Similarly, the choice of a particular database technology can result in lock-in. If the selected database technology becomes obsolete or incompatible with evolving requirements, migrating to a different database system may pose significant challenges.

Since every application has some level of lock-in, this fear is not a strong argument against serverless architecture in particular.

Multi-cloud in Serverless Thinking

Multi-cloud is many people’s answer to vendor lock-in, but Corey Quinn, a well-known cloud economist and advocate, often refers to multi-cloud as a “worst practice.” Quinn argues that organizations should focus on optimizing their cloud deployments within a single provider rather than trying to spread workloads across multiple providers. He believes that the benefits of specialization, vendor-specific services, and cost optimization can be maximized by committing to a single cloud provider.

Moreover, finding people who can effectively manage a single cloud platform is hard enough in itself, and the difficulty only grows when managing multiple cloud platforms simultaneously.

If you are concerned about resiliency, it’s important to recognize that cloud platforms such as AWS rarely experience outages across all regions simultaneously. Individual Availability Zones (AZs) within a provider’s infrastructure may face downtime, but a multi-AZ strategy addresses much of that risk. You can build resiliency in layers, from instances to AZs to regions, as you move up the levels of abstraction in your cloud architecture.
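A back-of-the-envelope calculation shows why spreading across AZs helps. Assume, purely for illustration, that each AZ is unavailable with some probability p at a given moment and that AZ failures are independent. Real failures can be correlated, which is exactly why regions form the next layer of redundancy.

```latex
P(\text{all } n \text{ AZs unavailable}) = p^{n}, \qquad A_{n} = 1 - p^{n}

% Example with a hypothetical p = 0.001:
A_{1} = 1 - 0.001 = 0.999
A_{3} = 1 - (0.001)^{3} = 1 - 10^{-9}
```

Under these assumptions, going from one AZ to three moves the chance of a full outage from one in a thousand to one in a billion; correlated failures make the real improvement smaller.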

Cold Start in Serverless

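A cold start is the initial delay experienced when a function (say, a Lambda function) is invoked and no already-initialized execution environment is available to handle the request. It occurs because the serverless platform must provision and initialize resources, including loading your code and running its initialization, before executing the function.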

Cold starts have minimal impact on latency-tolerant applications and on event-driven background processing, but they can introduce noticeable delays in latency-sensitive or real-time scenarios.
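Whether requests hit cold starts depends heavily on traffic shape. The following toy simulation is a sketch, not a model of Lambda’s actual internals: it assumes each environment serves one request at a time, that a new environment pays a fixed `init_time` before running, and that idle environments are reclaimed after `idle_timeout` seconds. The real reclamation policy is not published, so `idle_timeout` (like all parameters here) is hypothetical.

```python
def simulate_cold_starts(
    arrivals: list[float],
    duration: float,
    init_time: float,
    idle_timeout: float,
) -> list[bool]:
    """Toy model: return, per request in arrival order, True if it hit a cold start."""
    free_at: list[float] = []  # time at which each live environment becomes idle
    cold: list[bool] = []
    for t in sorted(arrivals):
        # Reclaim environments that have been idle for longer than idle_timeout.
        free_at = [f for f in free_at if t - f <= idle_timeout]
        idle = [i for i, f in enumerate(free_at) if f <= t]
        if idle:
            # Warm start: reuse an already-initialized environment.
            free_at[idle[0]] = t + duration
            cold.append(False)
        else:
            # Cold start: provision and initialize a new environment, then run.
            free_at.append(t + init_time + duration)
            cold.append(True)
    return cold


if __name__ == "__main__":
    steady = [float(s) for s in range(10)]  # one request per second
    sparse = [0.0, 1800.0, 3600.0]          # one request every 30 minutes
    burst = [0.0] * 5                       # five simultaneous requests
    for name, arrivals in [("steady", steady), ("sparse", sparse), ("burst", burst)]:
        flags = simulate_cold_starts(arrivals, duration=0.1, init_time=0.5, idle_timeout=600)
        print(name, sum(flags), "cold starts out of", len(flags))
```

With these made-up parameters, the steady stream sees a single cold start, while every sparse request and every request in the simultaneous burst is cold.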

Mitigation strategies like warm-up functions, scheduled invocations, and provisioned concurrency help reduce the occurrence and impact of cold starts.

Finally, let’s look at when an all-serverless approach might not be the best solution.

Where All-Serverless May Not Be the Best Solution

Let’s say you have an API where each individual request invokes a Lambda function. If this API receives consistent, predictable, and very high traffic, running a Lambda function per request can get expensive, and it may be more cost-effective to provision and manage dedicated resources in a traditional setup, such as EC2 instances or containers.

With serverless, the pay-as-you-go pricing model can become less favourable for sustained high workloads, such as a simple API with massive volume. One example is collecting user impressions from an app with millions of active users, each generating hundreds of impressions within a given period.

To make the right choice in such scenarios, you need to perform a cost-benefit analysis of AWS API Gateway with Lambda functions versus managing your own instances or containers, including their scaling and the people required to run them.
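The first pass of that analysis can be simple arithmetic. Lambda bills per request plus compute duration in GB-seconds, and API Gateway bills per request, so serverless cost grows linearly with traffic, while a provisioned setup is closer to a fixed monthly amount. The sketch below assumes exactly that simplified model and ignores free tiers, data transfer, and logging; every price and traffic figure in it is a hypothetical placeholder, not current AWS pricing.

```python
from dataclasses import dataclass


@dataclass
class ServerlessPrices:
    """Unit prices supplied by the caller (placeholders; use current regional pricing)."""
    lambda_per_million_requests: float
    lambda_per_gb_second: float
    api_gateway_per_million_requests: float


def serverless_monthly_cost(
    requests: float, avg_duration_s: float, memory_gb: float, prices: ServerlessPrices
) -> float:
    """Per-request charges (Lambda + API Gateway) plus Lambda compute in GB-seconds."""
    millions = requests / 1_000_000
    gb_seconds = requests * avg_duration_s * memory_gb
    return (
        millions * (prices.lambda_per_million_requests + prices.api_gateway_per_million_requests)
        + gb_seconds * prices.lambda_per_gb_second
    )


def break_even_requests(
    fixed_monthly_cost: float, avg_duration_s: float, memory_gb: float, prices: ServerlessPrices
) -> float:
    """Monthly request volume above which the fixed-cost setup becomes cheaper.

    fixed_monthly_cost should cover instances or containers, load balancers, and
    the people needed to operate them. Serverless cost is linear in requests here.
    """
    cost_per_request = serverless_monthly_cost(1, avg_duration_s, memory_gb, prices)
    return fixed_monthly_cost / cost_per_request


if __name__ == "__main__":
    prices = ServerlessPrices(0.20, 0.0000166667, 3.50)  # illustrative placeholders only
    # Impressions scenario: 2M users x 200 impressions/day x 30 days (hypothetical)
    monthly_requests = 2_000_000 * 200 * 30
    cost = serverless_monthly_cost(monthly_requests, 0.05, 0.128, prices)
    fixed = 20_000.0  # hypothetical monthly cost of self-managed instances plus operations
    print(f"requests/month: {monthly_requests:,}")
    print(f"serverless cost/month: ${cost:,.0f}")
    print(f"break-even volume: {break_even_requests(fixed, 0.05, 0.128, prices):,.0f} requests/month")
```

The useful output is less the exact figure than where your real traffic sits relative to the break-even volume; plug in current prices and your own operating costs before drawing conclusions.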

Serverless might also be a poor fit when your application cannot tolerate cold starts and requires ultra-low latency. Many applications can tolerate occasional cold starts, but certain use cases, such as gaming platforms that demand latency in the sub-millisecond range, may struggle with a Lambda-based approach. In such cases, relying solely on serverless architecture can make it difficult to meet stringent latency requirements.

That’s a wrap! I hope this article helped you learn about serverless architecture thinking. Stay tuned for more informative articles like this.

References:

AWS Serverless Hero Yan Cui (The Burning Monk): https://theburningmonk.com/

Corey Quinn, Last Week in AWS, on multi-cloud as a worst practice: https://www.lastweekinaws.com/blog/multi-cloud-is-the-worst-practice/